soundgarden

Soniferous Gardens

“A garden is a place where nature is cultivated. It is a humanized treatment of landscape. Trees, fruit, flowers, grass are sculpted organically from the wilderness by art and science. Sometimes the garden is drastically clipped and manicured, as in the harshly classical gardens of Versailles and Vienna; elsewhere man has restricted his touch to assisting certain characteristic features of the landscape to flourish. A true garden is a feast for all the senses.” - R. Murray Schafer, The Soundscape, p. 246.

Creating a soniferous garden, an “acoustically designed park” (Schafer, p. 247), requires awareness and understanding of the given immediate sonic environment. Its acoustic qualities stem from a manifold range of features, including geometry, materials, time of day, weather, and so on. In principle, we can model and control these features using modern game engines, which is exactly what I will do in this project.

This project is an attempt to adapt the concept of the soniferous garden into a short-form video game, while also testing some hypotheses from music-emotion research about the effect of certain musical structures on emotion.

Adapting the concept

A quick Google search for “soniferous garden video games” led me to this video of a VR installation:

According to the artist’s website, the project features field recordings from the Yellowstone and Badlands National Parks paired with generative music. I find it interesting that the artist incorporated generative music, although the reasoning behind it is not mentioned. However, a case for generative music in a virtual adaptation of a soniferous garden can certainly be made if we look at Schafer’s examples, where he explicitly mentions water organs and wind harps. I will interpret these as forms of generative music machines, powered by the elements, where water and wind become the streams of data that generate an ever-changing flow of music. Therefore, the idea of incorporating generative music into a virtual soniferous garden is reasonable. (On this note, it would be interesting to conduct a deeper investigation into soniferous gardens in video games/soniferous gardens as video games in the future.)
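To make the "elements as data streams" reading concrete, here is a minimal sketch of a virtual wind harp. It is not taken from the VR installation or from my own music system: the function names, the pentatonic scale, and the simulated wind are all assumptions chosen for illustration.

```python
import math

# Assumed scale: C major pentatonic as MIDI note numbers (C4 = 60).
PENTATONIC = [60, 62, 64, 67, 69]


def wind_to_note(wind_speed, max_speed=20.0, octaves=2):
    """Map a wind speed to a MIDI note on a pentatonic scale."""
    # Clamp to [0, 1] so out-of-range readings still produce a playable note.
    t = min(max(wind_speed / max_speed, 0.0), 1.0)
    steps = len(PENTATONIC) * octaves
    index = min(int(t * steps), steps - 1)
    octave, degree = divmod(index, len(PENTATONIC))
    return PENTATONIC[degree] + 12 * octave


def simulated_wind(n, base=8.0, swing=6.0):
    """Stand-in for a weather system: slow sinusoidal gusts (hypothetical)."""
    return [base + swing * math.sin(i * 0.7) for i in range(n)]


# Stronger gusts produce higher notes; the melody changes with the wind.
melody = [wind_to_note(w) for w in simulated_wind(8)]
```

Quantizing to a scale is one simple way to keep an unpredictable input stream sounding consonant; other mappings (wind to rhythm, density, or timbre) would fit the same idea.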

Running into a (game) jam

Originally I had planned to put together a simple proof of concept, developed over a weekend in game jam style. I wanted to:

- Upgrade my Procedural Music System

- Build a game design around the music system

This did not work out as planned. I simply put too much on my plate by trying to do two major things at the same time. I also realized it had been a while since I created a game from scratch. For the last two years I had mostly been involved in developing ongoing projects, and I’ve become rusty. Next time, I have to make sure that all required tools are ready to use during the jam. As one of the two main tasks is also one of the fundamental tools, I now understand that I need to focus on the tool first. My goal is to work on it until it can generate ‘pleasant-sounding’ music.

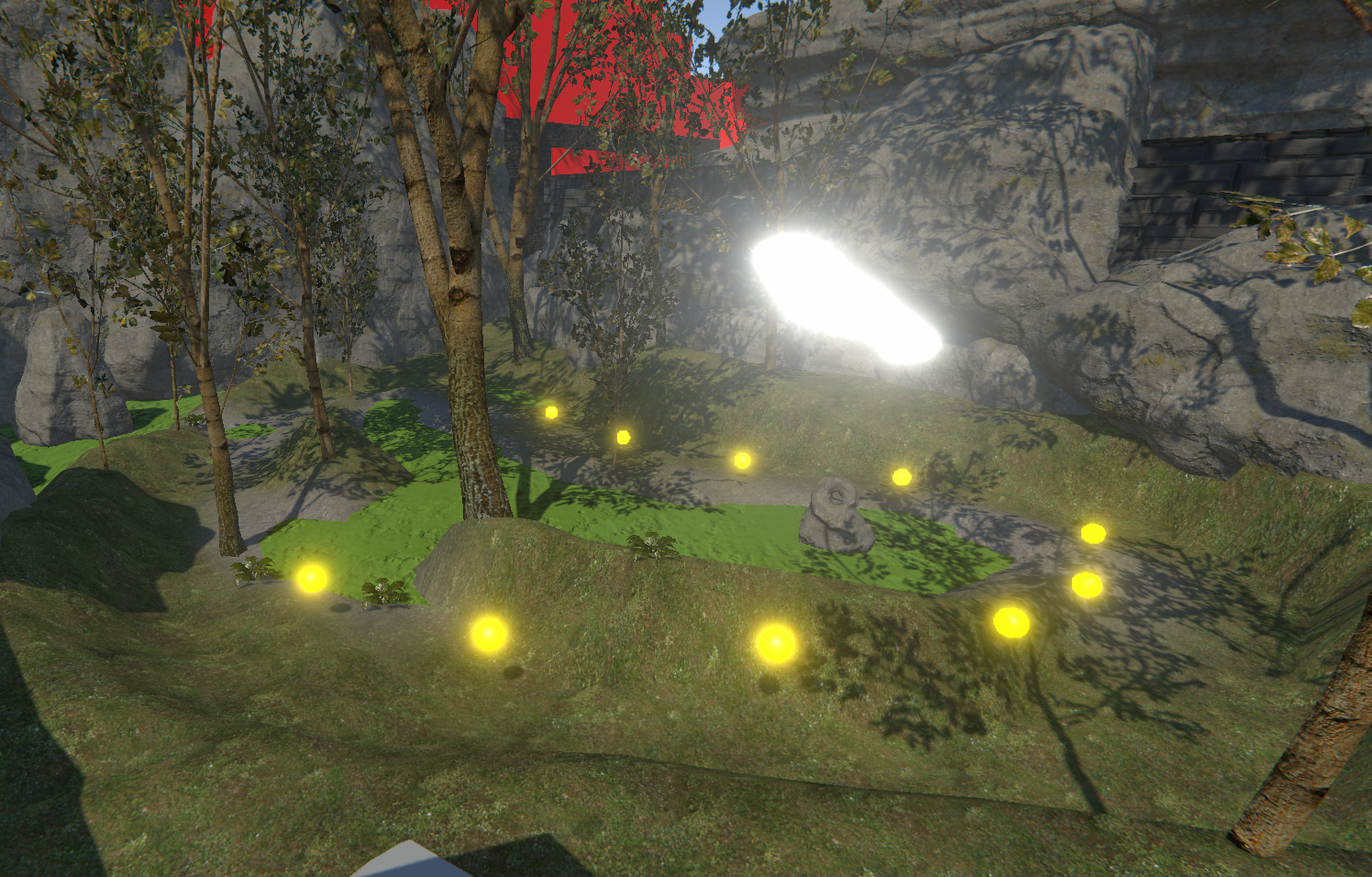

Nevertheless, here is a screenshot of the first attempt:

Musical Pac-Man? 🤔


Research

I watched this video on the soundtrack of Mini Metro, showing how the game state and game events can be translated into musical pieces that are both pleasant and meaningful.

Meaningful because the resulting music is a representation of what is happening in the game: trains moving between stations, new stations popping up; the tempo of the music is derived from the length of an in-game hour. These are only a few examples, all providing inspiration for this project regarding adaptive and generative music techniques.
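As a rough illustration of deriving tempo from game time, the sketch below converts the real-time length of an in-game hour into beats per minute. I do not know the exact mapping Mini Metro uses; `tempo_from_hour` and the assumption of four beats per in-game hour are hypothetical.

```python
def tempo_from_hour(seconds_per_game_hour, beats_per_game_hour=4):
    """Return a tempo in BPM so that the given number of beats fills one in-game hour."""
    if seconds_per_game_hour <= 0:
        raise ValueError("an in-game hour must last longer than 0 seconds")
    seconds_per_beat = seconds_per_game_hour / beats_per_game_hour
    return 60.0 / seconds_per_beat


# If the game speeds up (shorter in-game hours), the music speeds up with it.
normal_speed = tempo_from_hour(2.0)
fast_forward = tempo_from_hour(1.0)
```

Tying the tempo to the game clock means the music stays aligned with the simulation even when the player changes the game speed.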

FRACT OSC was a big inspiration for me and put me on the trajectory of making games and making music with/in games. Therefore, it made sense to take a look at it for this project. One object seemed especially interesting to me in the context of the soniferous garden:

This beam of light feels alive. It has a pulse that triggers movement and sound, and it reacts to you with a filter sweep, as you move closer to and away from it. I would like to have something similar to that in my garden.
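A proximity-driven filter sweep like this could be sketched as a mapping from player distance to a low-pass cutoff frequency. This is my own guess at the behaviour, not how FRACT OSC implements it; the function name, distance range, and frequency range are assumptions.

```python
def cutoff_for_distance(distance, near=1.0, far=20.0, min_hz=200.0, max_hz=8000.0):
    """Return a low-pass cutoff in Hz: bright when close, muffled when far away."""
    # Normalize distance to 0 (at or inside `near`) .. 1 (at or beyond `far`).
    t = (distance - near) / (far - near)
    t = min(max(t, 0.0), 1.0)
    # Interpolate exponentially, since pitch perception is roughly logarithmic.
    return max_hz * (min_hz / max_hz) ** t


# Walking away from the object gradually closes the filter.
sweep = [cutoff_for_distance(d) for d in (1.0, 5.0, 10.0, 20.0)]
```

In a game engine, the returned value would be written to the synthesizer's filter cutoff once per frame, using the distance between the player and the sound object.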

Building the music system

I am using ProcMu, a procedural music system I built for my master’s thesis, as a foundation for the music system in this project. This provides me with a basic structure that I plan to improve on by adding more sophisticated generative algorithms. Instead of CSound, which ProcMu originally used for music sequencing and sound generation, I am now using a Unity plugin called AudioHelm. This removes the step of translating parameters and sending them to CSound. In addition, AudioHelm comes with a versatile synthesizer that can produce some decent-quality sounds.

I will add documentation for the music system in this project as I work on it. Until then, here is the documentation of my master’s project, which contains information about the different components of ProcMu, many of which will also be found in the upgraded version.

More about the development of the music generators to come in a future entry.

Upcoming

Next steps:

- Finish implementing generative algorithms

- Design distinctive sound settings for each area/music zone

Further design considerations:

- Synchronize footstep sounds with the sequencer. Does the player then also become a part of the soniferous garden? This would be interesting from both a game design and a theoretical perspective.

- Place dynamic sound objects in the environments, possibly also synchronized with the music, similar to the example in FRACT OSC above.

- Create a music-emotion interface that maps valence/arousal to music configurations, each representing an emotion in Russell’s circumplex model of affect.
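A first idea of what the music-emotion interface could look like is sketched below. It is only a sketch under assumptions: `MusicConfig`, its fields, and the value ranges are hypothetical, and the two mappings (arousal to tempo and loudness, valence to mode) are commonly cited associations that I still need to check against the research before committing to them.

```python
from dataclasses import dataclass


@dataclass
class MusicConfig:
    tempo_bpm: float
    mode: str       # "major" or "minor"
    velocity: int   # MIDI-style loudness, 0-127


def config_for_emotion(valence, arousal):
    """Map valence and arousal (each in [-1, 1]) to a music configuration."""
    valence = min(max(valence, -1.0), 1.0)
    arousal = min(max(arousal, -1.0), 1.0)
    # Assumption: higher arousal means faster and louder music.
    energy = (arousal + 1.0) / 2.0          # rescale to 0..1
    tempo = 60.0 + energy * 80.0            # 60..140 BPM
    velocity = round(50 + energy * 60)      # 50..110
    # Assumption: non-negative valence maps to major, negative to minor.
    mode = "major" if valence >= 0.0 else "minor"
    return MusicConfig(tempo, mode, velocity)


# Example points in the four quadrants of the valence/arousal plane.
excited = config_for_emotion(0.8, 0.8)
calm = config_for_emotion(0.8, -0.8)
tense = config_for_emotion(-0.8, 0.8)
sad = config_for_emotion(-0.8, -0.8)
```

Each music zone in the garden could then be assigned a point in the valence/arousal plane instead of a hand-tuned set of parameters.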