soundgarden

Recap

What I did since last time:

- Populated the world with assets

- Refined the gameplay

- Designed more musical elements

- Connected gameplay to generative music parameters

- Further developed the area-specific sounds

- Conducted research into soundscapes and acousmatics

Iterating on gameplay

The original gameplay loop is as follows: an object is hidden in the environment. Whenever the player finds and touches it, the object moves to another location, waiting to be found again. As a pseudocode loop, it would look something like this:

    while (true) {
        Game.SpawnObjectAtRandomLocation();

        // Wait here until the player finds and touches the object.
        while (!Player.HasFoundObject()) {
            // the player keeps exploring the garden
        }
    }

This mechanic aims to give the player an incentive to move through the game environment. There is no time limit to find the object, allowing players to engage with the garden at their own pace. I am also considering adding an option for players to disable the hidden object game.

Moving around and looking for an object is all the player does. This seems quite boring to me. Even though I hope that generative music will make gameplay less boring, I’m afraid this might not be enough to be engaging.

I ended up replacing the glowing collectable orb with stones that blend into the environment. Because they are smaller and can be hidden in plain sight, the player will likely need to pay more attention to their surroundings. This also provides an opportunity to employ the affective qualities of the generative music: because the stones are not easily visible from afar, musical guidance becomes more important. When the player gets closer to the hidden stone, the music could shift to a style designed to slow them down and encourage a closer look. I’m also planning to add a deliberate ‘pick-up’ action, so the player does not accidentally collect an object by walking over it without having consciously found it.
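To make the idea of proximity-based musical guidance a bit more concrete, here is a minimal C++ sketch of how the distance between player and stone could be turned into a single 0-to-1 value that a music parameter might follow. This is not the project's actual code: `Vec2`, `Closeness`, and `guideRadius` are hypothetical names, and the linear falloff is just an assumed starting point.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical 2D position on the island (not an Unreal type).
struct Vec2 {
    float x;
    float y;
};

// Straight-line distance between two points.
float Distance(Vec2 a, Vec2 b) {
    return std::hypot(a.x - b.x, a.y - b.y);
}

// Returns 0 when the player is at or beyond guideRadius and rises
// linearly to 1 when the player stands on the stone.
// guideRadius is an assumed tuning value, not taken from the project.
float Closeness(Vec2 player, Vec2 stone, float guideRadius) {
    assert(guideRadius > 0.0f);
    float d = Distance(player, stone);
    return std::clamp(1.0f - d / guideRadius, 0.0f, 1.0f);
}
```

A value like this could then be fed into whichever parameter makes the music calmer, so that the change sets in gradually as the player approaches instead of switching abruptly.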

Barriers

I realized that giving the player access to all parts of the island from the beginning might be detrimental when it comes to finding small objects that are scattered around.

Instead, I implemented barriers that disappear one after another, depending on how many objects the player has found. This way, players are introduced to the environment gradually, which might help facilitate stepwise familiarization with the different parts of the map. Also, this will give a purpose to collecting the collectibles.
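The unlocking rule can be sketched in a few lines of C++. This is only an illustration under my own assumptions: the function name `OpenBarrierCount` and the idea of one fixed stone count per barrier are hypothetical, and the actual thresholds would need tuning in the game.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Counts how many barriers should be gone for a given number of found stones.
// unlockThresholds[i] is the assumed number of stones needed to remove
// barrier i; the values must be sorted in ascending order.
std::size_t OpenBarrierCount(const std::vector<int>& unlockThresholds,
                             int stonesFound) {
    assert(stonesFound >= 0);
    std::size_t open = 0;
    for (int needed : unlockThresholds) {
        if (stonesFound < needed) {
            break;  // this barrier and all later ones stay closed
        }
        ++open;
    }
    return open;
}
```

With thresholds of, say, 2, 5, and 9 stones, a player who has found 6 stones would have passed the first two barriers and still face the third.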

Expanding the accessible area will also expand the area where the collectible can spawn. I’m wondering whether to stick with spawning one collectible at a time, or several (maybe even lots??…). It could make sense to have several objects at once, considering they are quite small. Finding a needle in a haystack doesn’t seem like a fun activity to me. However, if there is more than one collectible, then where should the music system guide the player? To the closest collectible? If we have lots of rocks, to the general area where most collectibles are?
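The two guidance options from these questions can be compared with a small C++ sketch. All names here (`Vec2`, `NearestStone`, `StoneCentroid`) are hypothetical, and the centroid is only a rough stand-in for "the general area where most collectibles are": with two separate clusters it would point at the empty space between them.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 2D position on the island (not an Unreal type).
struct Vec2 {
    float x;
    float y;
};

// Option 1: index of the stone closest to the player.
std::size_t NearestStone(Vec2 player, const std::vector<Vec2>& stones) {
    assert(!stones.empty());
    std::size_t best = 0;
    float bestDist = std::hypot(stones[0].x - player.x, stones[0].y - player.y);
    for (std::size_t i = 1; i < stones.size(); ++i) {
        float d = std::hypot(stones[i].x - player.x, stones[i].y - player.y);
        if (d < bestDist) {
            bestDist = d;
            best = i;
        }
    }
    return best;
}

// Option 2: average position of all stones.
Vec2 StoneCentroid(const std::vector<Vec2>& stones) {
    assert(!stones.empty());
    Vec2 sum{0.0f, 0.0f};
    for (const Vec2& s : stones) {
        sum.x += s.x;
        sum.y += s.y;
    }
    float n = static_cast<float>(stones.size());
    return Vec2{sum.x / n, sum.y / n};
}
```

Guiding toward the nearest stone keeps the feedback precise, while the centroid gives a vaguer sense of direction that might suit a map with many stones.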

Once the player has access to all parts of the map, the stones could be used for something else. For example, maybe the player can throw them in the ocean once they gain access to the beach. Maybe there could even be specific actions for each area?

Mapping

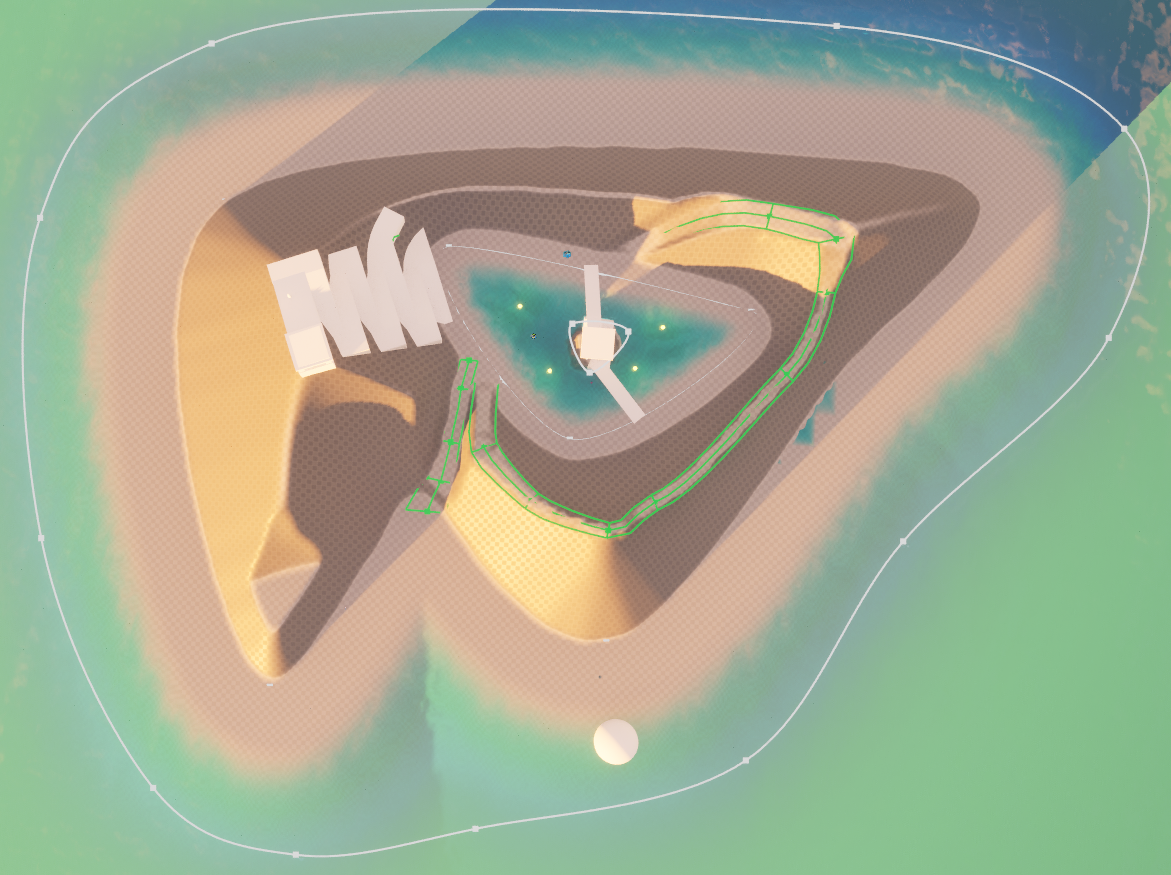

When I switched the project over to Unreal, I had to come up with a new map. It still follows the same concept, but Unreal’s spline-based mapping tools help achieve a generally smoother outcome. Hoping that tinting the world in a certain style would inspire some musical ideas, I focused on the visual design.

The Unity map had a swamp in it. In this new version, it became a sea cove, since that was easier to set up with Unreal’s water body system and I didn’t have any suitable assets anyway.

However, once I had figured out Unreal some more, and found a suitable free asset pack, the swamp returned.

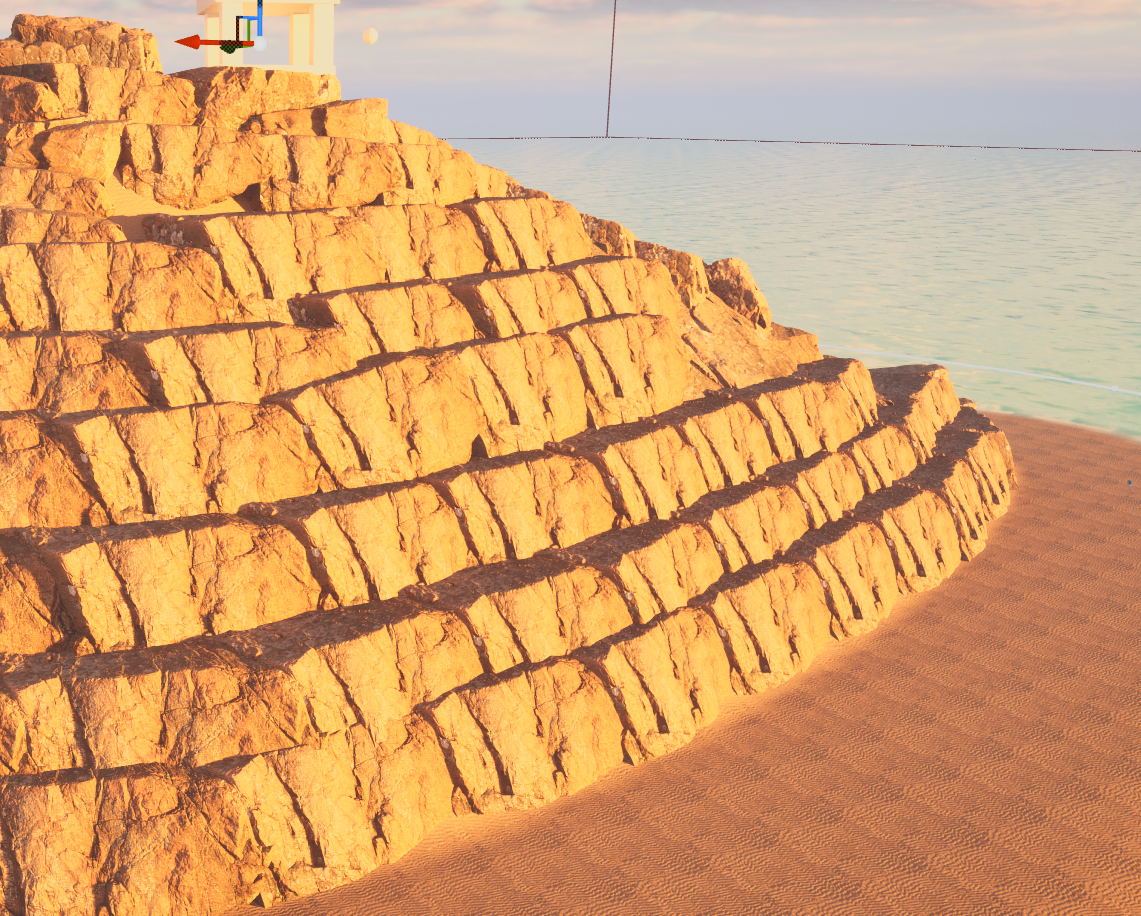

All the rock geometry in the map comes from a single asset, placed in various positions, scales, and rotations. At first, my dressing up of the terrain looked like blatantly obvious copy-pasting, which I aim to either fix or cover up with some vegetation.

As my technique was improving throughout the process, however, things ended up looking much smoother. Notice the difference between the picture above, or below on the left side, where I had started, and the rocks below on the right, where I finished covering the island.

If I were to use a texture mapping technique that depends on the world position of the object, and possibly uses some form of randomization, I could make the texture repetitions disappear and the asset repetitions less obvious. At the end of the day, it’s just shapes.

When mapping, I like to consider the logic of the environment. How did the water get into the center of the island? How does it stay there?

I’m imagining that occasional rainfall, along with a rock structure that isolates the body of water in the center of the island from the sea, led to the emergence of the swamp. Of course, the shape of the island and the distribution of the objects and paths may suggest an influence beyond the natural.

So let this island exist at the threshold between the real and the magical (which is where games operate anyway, right?). Maybe that’s something I can play with in future iterations. Thinking of colorful fog and psychedelic weather phenomena…. Oh and maybe a day/night cycle…

Simplifying the sound setup

Since the beginning of the project, I have been struggling with controlling the overall musical outcome. My approach was to have music configurations, i.e., sets of parameters, that would define how music would be generated in each area of the game world. This proved to be difficult, since there are many parameters. Also, I’m not familiar enough with Unreal’s GUI building tools to implement a complex UI. The solution? Influence individual generative music parameters, rather than all of them at once. Components of the system should be able to influence and be influenced by one another without relying on some monolithic master structure.

Beyond that, I decided to use some pre-recorded or pre-composed elements to help generate a more coherent and possibly pleasant-sounding outcome, without having to worry too much about the complex task of coming up with a fully generative solution. This also opens up the possibility of high-quality samples that could act as a ‘filler’ in the music, bridging the gaps between sounds while increasing overall sound quality. I am still implementing generative approaches wherever they make sense and do not become a technical burden, and I might replace non-generative elements with generative ones as I work more on the music.

I have also looked into mapping player actions to variables which can then be used as parameters to control the music system. The game should check whether the player is walking to switch between “walking” and “resting” themes. Such themes could come in the form of specific drum patterns, the activation of some instruments, or changes to multiple generative parameters in order to generate music that sounds more intense.
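As a rough illustration of the walking/resting check, here is a minimal C++ sketch. It assumes the game can report the player's current speed as a float, and it uses two thresholds instead of one so the theme does not flicker when the speed hovers around the cutoff. `ThemeSelector`, `MovementTheme`, and the threshold values are hypothetical and not part of the project.

```cpp
#include <cassert>

enum class MovementTheme { Resting, Walking };

// Switches between themes based on player speed.
// Two thresholds (hysteresis) keep the theme from flickering when the
// speed hovers around a single cutoff. Both values are assumed tuning numbers.
class ThemeSelector {
public:
    ThemeSelector(float startWalkingSpeed, float stopWalkingSpeed)
        : startSpeed_(startWalkingSpeed), stopSpeed_(stopWalkingSpeed) {
        assert(stopWalkingSpeed <= startWalkingSpeed);
    }

    // Call once per frame (or per music tick) with the current speed.
    MovementTheme Update(float speed) {
        if (theme_ == MovementTheme::Resting && speed >= startSpeed_) {
            theme_ = MovementTheme::Walking;
        } else if (theme_ == MovementTheme::Walking && speed <= stopSpeed_) {
            theme_ = MovementTheme::Resting;
        }
        return theme_;
    }

private:
    float startSpeed_;
    float stopSpeed_;
    MovementTheme theme_ = MovementTheme::Resting;
};
```

The returned theme could then select a drum pattern or enable an instrument, depending on how the themes end up being realized.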

Avenues of music-emotion research

What does it mean to have ‘intense’ music? Thinking about how to use music to affect player behavior, I turned to music-emotion research in order to find initial approaches.

I’m using Russell’s Circumplex Model of Affect, where emotions are defined by valence, i.e., positivity (happy vs. sad), and arousal, i.e., strength. By musical intensity, I refer to a combination of valence and arousal. This simplification is informed by the fact that several studies in music-emotion research showed increases or decreases in both the valence and arousal dimensions triggered by a single feature. For example, research showed that a staccato articulation (many short notes) would yield both higher perceived valence and higher perceived arousal than a legato articulation (long, connected notes). I will use research such as the one linked as a guideline for designing the music to purposefully affect the player’s emotions and behavior, aiming to help them find the collectibles quicker.
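To show how a single intensity value could drive articulation, here is a small C++ sketch that maps intensity to note length, from legato at low intensity to staccato at high intensity. The function name and the two gate values are my own assumptions for illustration; the research only suggests the direction of the effect, not these numbers.

```cpp
#include <algorithm>
#include <cassert>

// Maps a target intensity in [0, 1] to a note gate length, expressed as a
// fraction of the step duration: low intensity gives long, connected notes
// (legato), high intensity gives short ones (staccato).
// legatoGate and staccatoGate are assumed example values.
float GateLengthForIntensity(float intensity,
                             float legatoGate = 1.0f,
                             float staccatoGate = 0.25f) {
    assert(staccatoGate > 0.0f && staccatoGate <= legatoGate);
    float t = std::clamp(intensity, 0.0f, 1.0f);  // guard against out-of-range input
    return legatoGate + t * (staccatoGate - legatoGate);
}
```

Other features could follow the same pattern, each with its own mapping from the shared intensity value.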

On working with MetaSounds

One of my approaches in developing sounds is to design and ear-test melodic and rhythmic patterns, as well as sound designs, in external software and then recreate them in the engine. While Unreal’s MetaSounds offers some capacity to design sounds in real time, changing the connections between modules, adding or removing modules, or introducing new variables will prompt a reset of the music system and interrupt audio playback. Designing sounds in real time without interruptions and reloads within MetaSounds requires the setup to be mostly complete, with float variables as inputs, as Unreal allows these to be changed via knobs in real time.

MetaSounds is quite similar to a Eurorack system, or at least this is how I see it. This view allows me to approach a musical task in a technical setting while remaining within a musician’s mindset.

When it comes to (electronic) music, there is a tradeoff between working with hardware and software. With hardware, it’s much easier to design a sound. Turning a real knob is more immediate than using the mouse to click and turn a virtual one. The tradeoff, however, goes both ways. With MetaSounds, whenever I find myself in need of a particular function or sound, I can build the module to generate it, which is something I often do. With Eurorack hardware, I can’t just solder together a new module, and buying modules can be prohibitively expensive!

Upcoming

Next steps

- Create more connections between player behavior/game state and music parameters

- Add more variation to the music

- Theory: situate my approach of combining natural/musical sounds within the soniferous garden and acousmatics

- Develop a plan to test and evaluate the project