soundgarden

Mapping things out

The picture above shows the first draft of the game environment, an island with three distinct areas:

- Swamp (middle, green)

- Labyrinth (bottom right, blue)

- Hill (top left, red)

The player is confined to these three areas and cannot wander the empty spaces near the beaches. The idea was to use height differences to maximize the distance between areas while keeping the footprint small and paths short. I also hope this creates a sense of scale and makes the map appear larger than it actually is. Another option would be to go fully procedural, generating a map and placing points of interest randomly. This is still a possibility for the future.

Since this soundscape-based game takes place on an island, the player should have some way of interacting with the sound of the waves. Therefore, in the second version of the map, I removed the labyrinth area and walls, making the beaches accessible.

Configuring music

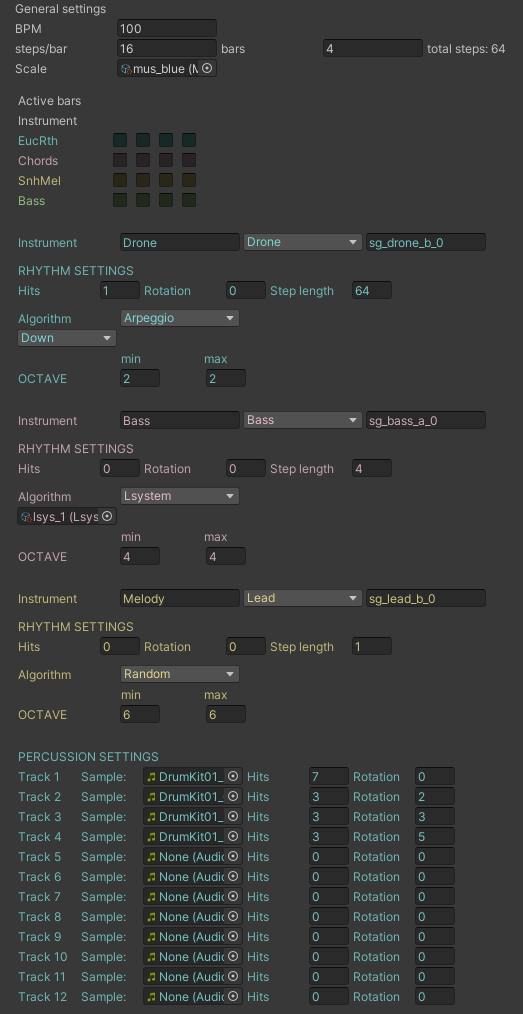

Below you can see the music configuration object I created in Unity, holding various generative parameters.

Rhythm and melody are currently generated separately. Rhythm, that is, when a note is played, is generated using a Euclidean algorithm. For the melodic part, i.e., pitch, I implemented several approaches that I want to compare in terms of output quality and fit, since some techniques might work better for certain musical tracks, for example bass vs. melody. Melodic parts are always derived from a global musical scale, ensuring harmonic unity. Here is a brief overview of the generative techniques I used.

- Random: A simple algorithm that just randomly selects notes from a given scale.

- L-system: Generating melodies from arbitrary grammars.

- Arpeggio: Follows the scale in a specified direction (up, down, and combinations).

- Perlin: Using Perlin noise to generate continuous melodic lines.
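
To make the split between rhythm and pitch more concrete, here is a minimal Python sketch of the idea. It is not the Unity implementation described above: all function names, the example scale, and the L-system rule are hypothetical, and the Euclidean rhythm uses a simple modulo formulation that spreads onsets evenly rather than a specific published algorithm. The Perlin variant is left out because it would need a noise implementation.

```python
import random
from itertools import cycle


def euclidean_rhythm(pulses, steps):
    """Spread `pulses` onsets as evenly as possible over `steps` slots."""
    if steps <= 0 or not 0 <= pulses <= steps:
        raise ValueError("need steps > 0 and 0 <= pulses <= steps")
    return [(i * pulses) % steps < pulses for i in range(steps)]


def random_melody(scale, length, rng):
    """Random: pick each note independently from the scale."""
    return [rng.choice(scale) for _ in range(length)]


def arpeggio(scale, length, direction="up"):
    """Arpeggio: walk the scale up, down, or up and back down."""
    if direction == "up":
        order = list(scale)
    elif direction == "down":
        order = list(reversed(scale))
    else:  # "updown": turn around without repeating the end points
        order = list(scale) + list(reversed(scale))[1:-1]
    source = cycle(order)
    return [next(source) for _ in range(length)]


def l_system_melody(scale, axiom, rules, iterations):
    """L-system: rewrite a string, then read it as moves on the scale."""
    symbols = axiom
    for _ in range(iterations):
        symbols = "".join(rules.get(s, s) for s in symbols)
    index, notes = 0, []
    for s in symbols:
        if s == "+":      # step up one scale degree (wraps around)
            index = (index + 1) % len(scale)
        elif s == "-":    # step down one scale degree (wraps around)
            index = (index - 1) % len(scale)
        elif s == "N":    # play the current scale degree
            notes.append(scale[index])
    return notes


def sequence(rhythm, pitches):
    """Assign the next pitch to every onset; rests become None."""
    source = cycle(pitches)
    return [next(source) if onset else None for onset in rhythm]


# Hypothetical global scale: C minor pentatonic as MIDI note numbers.
SCALE = [60, 63, 65, 67, 70]

bass = sequence(euclidean_rhythm(3, 8), arpeggio(SCALE, 3, "up"))
lead = sequence(euclidean_rhythm(5, 16), random_melody(SCALE, 5, random.Random(1)))
print(bass)
print(lead)
```

Because every generator draws from the same scale, swapping one technique for another on a given track changes the contour of the line but not its harmonic fit.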

Despite all the work up to this point, I felt like I had been missing a sense of direction for this project. I knew roughly where I wanted to be but could not find an exciting and interesting path to get there, getting stuck with too much technical implementation and too little conceptual incentive. However, all I needed was…

A hard reset

Wednesday, February 14, 20:55. As my Cheetos-stained fingers type these words, I finally got a sense of direction. My original plan for today was to test a build of the project, to see if the audio system I was using would actually work. I never really doubted that. In the worst case, a Windows build would always work.

Welp, that was not the case, meaning most of the implementation work of the past weeks was for nothing…?

Not quite. Enter, again, Unreal. I had originally planned to use it, mainly because its MetaSounds system is the only real-time audio solution that is integrated within a game engine, and it is quite similar to PureData or MaxMSP, both of which I have worked with extensively before. However, I didn’t feel confident enough to use Unreal on a new, time-constrained project. What mainly stopped me was that I had to create a generative configuration interface, as seen above, and I just couldn’t wrap my head around connecting the Unreal GUI builder and MetaSounds in this short time.

However, I found there are simpler but no less effective ways to control the music. Everything else was actually quite easy to do, since I had already “practiced” using Unreal for a few months. Working on the Unity project had also helped to make my idea clearer. Within two days, I was able to whip up a basic setup that resembles what I already had in Unity, music and all, and then some, all without implementing a GUI.

Reducing complexity

I solved the music configuration issue by moving away from a monolithic structure. Instead of controlling all parameters concurrently in a central system, I’m going to implement different modules that each affect individual music parameters. For example, one module could affect rhythmic patterns based on how the player moves around, while another could choose a scale based on the player’s location. In addition, “sound landmarks” placed in the environment give various areas a sound signature, meant to facilitate player orientation. It is much easier to set up a principal musical structure that is supported by several environmental sound objects than to create and assign whole sets of configurations per area.
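
The modular idea can be sketched roughly as follows, in Python rather than in Unreal or MetaSounds. Everything here is an assumption made for illustration: the class names, the speed range, the mapping from speed to rhythmic density, and the scales assigned to each area.

```python
from dataclasses import dataclass


@dataclass
class PlayerState:
    speed: float  # hypothetical: movement speed in metres per second
    area: str     # hypothetical: name of the area the player is in


@dataclass
class MusicState:
    pulses: int = 4                        # onsets per rhythmic cycle
    steps: int = 16                        # slots per rhythmic cycle
    scale: tuple = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets from the root


class RhythmModule:
    """Owns one parameter: rhythmic density follows movement speed."""

    def __init__(self, max_speed=6.0, min_pulses=2, max_pulses=12):
        self.max_speed = max_speed
        self.min_pulses = min_pulses
        self.max_pulses = max_pulses

    def update(self, player, music):
        # Clamp the normalised speed to 0..1 before mapping it to pulses.
        amount = min(max(player.speed / self.max_speed, 0.0), 1.0)
        span = self.max_pulses - self.min_pulses
        music.pulses = round(self.min_pulses + amount * span)


class ScaleModule:
    """Owns one parameter: the scale follows the player's location."""

    def __init__(self, scales_by_area, default):
        self.scales_by_area = scales_by_area
        self.default = default

    def update(self, player, music):
        music.scale = self.scales_by_area.get(player.area, self.default)


def update_music(modules, player, music):
    """Let every module adjust its own parameter, one after another."""
    for module in modules:
        module.update(player, music)
    return music


# Hypothetical area-to-scale assignment (semitone offsets).
MAJOR = (0, 2, 4, 5, 7, 9, 11)
AREA_SCALES = {"swamp": (0, 2, 3, 5, 7, 8, 10), "hill": (0, 2, 4, 7, 9)}

modules = [RhythmModule(), ScaleModule(AREA_SCALES, default=MAJOR)]
state = update_music(modules, PlayerState(speed=3.0, area="swamp"), MusicState())
print(state)
```

Each module touches only the field it owns, so adding or removing one does not require a new full configuration per area.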

Natural/diegetic sounds?

I have paired these two words to complement each other, only to realize a moment later that diegetic and natural seem almost diametrically opposed to each other. Diegetic is often used in the context of something artificial (i.e., not natural), a human-made medium. For instance, music in a movie can be diegetic and non-diegetic, coming from on- or off-screen. The same can be the case for music in games. A sound may be defined as natural in its diegetic context, such as the howling wind that blows over a meadow, rustling the grass.

To overcome this opposition, let’s frame things. Games are an interactive medium that simulates dynamic worlds, i.e., virtual environments. Since the given virtual environment represents the totality of what exists in that context, would that not bear a resemblance to nature? What if we assume this virtual totality as nature, and therefore, all the entities it holds as the natural foundation that a soniferous garden is built upon?

Natural sounds

My concept for environmental sound objects is to combine two sound layers: The first layer would aim to emulate a sound one would generally associate with a natural origin. For example, using noise to generate something that represents waves, or wind. The second layer would present a melodic sound. Melodic sound layers could be harmonically linked to an overall musical scale. Any melodic and rhythmic aspects, both of “natural” and melodic sounds, could be synchronized with a sequencer. This sequencer would control both diegetic and non-diegetic music/sounds. Some of these objects can be the aforementioned sound landmarks, others may hold less meaning but could enhance the environment with dynamic and playful behaviors.
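
As a rough illustration of the two-layer idea, the following Python sketch renders one sequencer cycle of a single sound object into a sample buffer. It is an offline approximation under several assumptions, not a MetaSounds patch: the sample rate, the raised-cosine swell standing in for surf, the linearly decaying sine tone, and all names are hypothetical.

```python
import math
import random

SAMPLE_RATE = 8000  # hypothetical, kept low so the sketch stays fast


def midi_to_hz(note):
    """Convert a MIDI note number to frequency (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def wave_layer(duration, rng, swell_hz):
    """'Natural' layer: white noise shaped by a slow swell."""
    count = int(duration * SAMPLE_RATE)
    samples = []
    for i in range(count):
        t = i / SAMPLE_RATE
        swell = 0.5 * (1 - math.cos(2 * math.pi * swell_hz * t))  # 0..1
        samples.append(swell * rng.uniform(-1.0, 1.0))
    return samples


def melodic_layer(duration, note):
    """Melodic layer: a sine tone with a linear decay."""
    count = int(duration * SAMPLE_RATE)
    hz = midi_to_hz(note)
    return [
        math.sin(2 * math.pi * hz * i / SAMPLE_RATE) * (1 - i / count)
        for i in range(count)
    ]


def sound_object(step_seconds, steps, scale, degree, rng, mix=0.5):
    """Render one sequencer cycle with both layers locked to its length."""
    duration = step_seconds * steps
    # One swell per cycle keeps the noise layer in sync with the sequencer.
    noise = wave_layer(duration, rng, swell_hz=1.0 / duration)
    # The pitch comes from the shared scale, so it fits the overall music.
    tone = melodic_layer(duration, scale[degree % len(scale)])
    return [mix * n + (1 - mix) * t for n, t in zip(noise, tone)]


SCALE = [60, 63, 65, 67, 70]  # hypothetical global scale (MIDI notes)
buffer = sound_object(0.125, 8, SCALE, degree=2, rng=random.Random(0))
print(len(buffer), max(abs(s) for s in buffer))
```

Deriving both the swell period and the note length from the same step duration is what ties the “natural” and the melodic layer to one clock in this sketch.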

Reflecting on reflection

Tuesday, February 20, 23:20. These things are hard to write. I feel like there is so much (many different concurrent processes) and so little (overall process is incremental) happening at the same time that it becomes difficult to tie the different directions of thought together. This writing is not only meant as a reflection on the development process of my unnamed semester project - working title: Soundgarden - it is also a reflection on how to polish my writing to the point that reflection becomes clearer. Originally, I had wanted to put all the above text into one coherent story before I decided to roughly separate it according to the featured themes and chronology. That seems to have made the text a lot more coherent already. I would expect that further writing will improve coherence and quality even more. But are coherence and quality all I want in a text? I feel like I am constantly forgetting relevant things, since I’m dealing with so many topics. So there should also be some form of completeness, with no step left out of the documentation. I think that writing a section at the end of a work day could help improve my process.

Music heard while working on the project

I often listen to music while I work. I am putting together a playlist of fitting and inspiring music that I come across as I work on the project. The tunes in the list at the time of writing feature repetitive structures that may lend themselves well to algorithmic adaptation.

Here it is. It will grow as I continue to work on this project.

Some of the featured tracks were at least partially recorded using modular synthesizers. There is a striking similarity between modular synthesis, MetaSounds, and pretty much any visual programming system but that’s a topic for another time…

Upcoming

Next steps:

- Make the music pleasant to listen to

- Implement gameplay: Hiding and finding objects. The overall music is meant to affectively influence players, while environmental sounds may hold clues about the hidden objects’ positions.

Further considerations:

- How does the project connect to Schafer’s Soniferous Garden?

- What principles of acoustic design apply here?

- What does it mean in the bigger picture of game soundscapes, and how does it relate, or link back, to real environments?